Dispelling DeepSeek Myths, Studying V3

Remember Jan 27th when Nvidia lost half a trillion dollars in market cap?

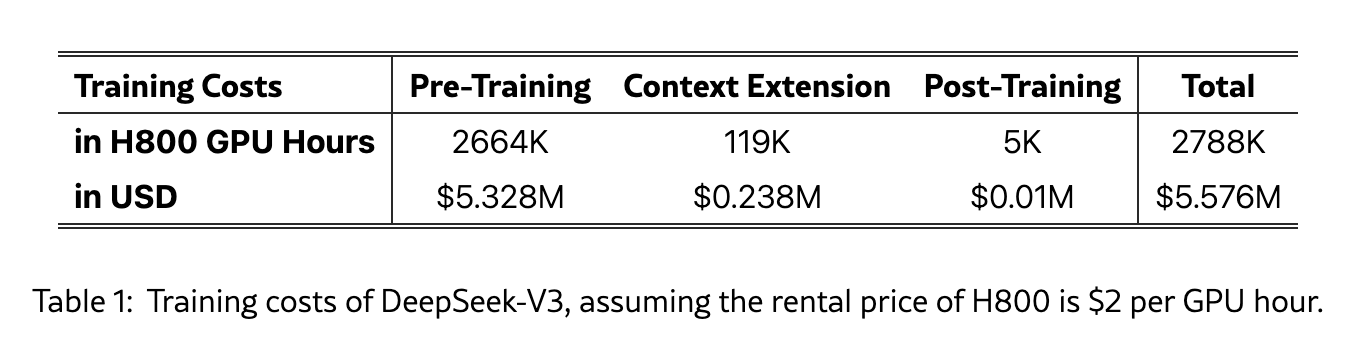

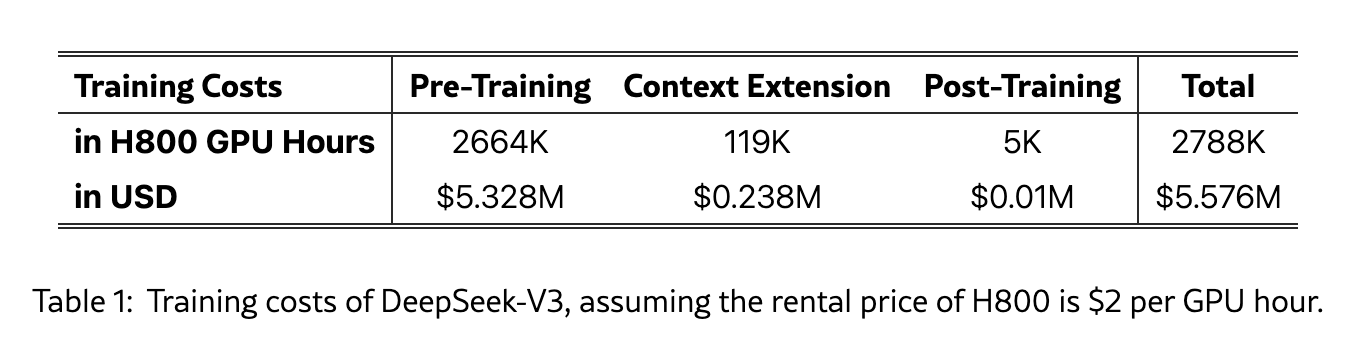

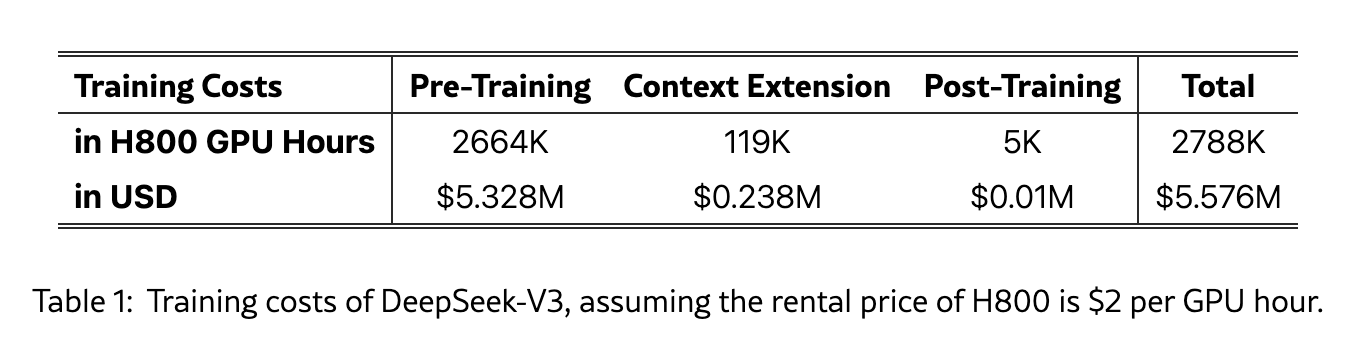

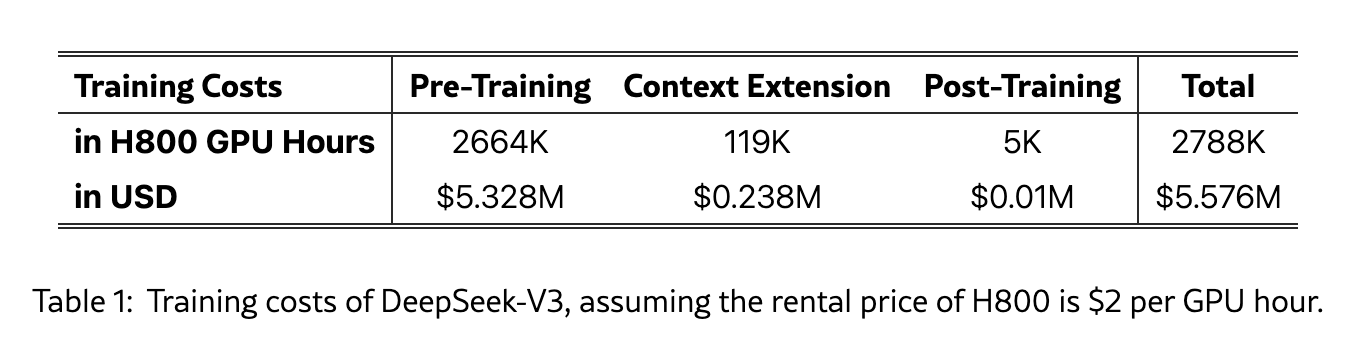

An inconspicuous table buried in a paper played a key role:

What did this paper actually say?

Welcome to the third and final post in a series looking at DeepSeek.

Let’s think things through from first principles. We can talk about misplaced investor panic to start, but then we’ll get technical and look at what the paper showed and draw our own conclusions.

V3 Training Cost

At the MIT event, Altman was asked if training GPT-4 cost $100 million; he replied, “It’s more than that.” Source

Anthropic CEO Dario Amodei: “Right now [the cost to train is] $100 million, and there are models in training that are more like $1 billion.” Source

DeepSeek’s $5.6M training cost stoked investor panic; how could a competitive foundation model be trained for roughly 5% of the cost quoted by Sam Altman and Dario Amodei?

Apples to Oranges

The first (seemingly obvious) observation is that Altman and Amodei didn’t explain their math.

Was Altman talking about the compute cost for the final training run alone? For example, 30K GPUs at $3/hr for 50 straight training days would cost $108M.
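That back-of-the-envelope figure is easy to check. Here is a quick sketch in Python; the GPU count, hourly rate, and run length are the hypothetical numbers from the sentence above, not figures disclosed by any lab, and `training_run_cost` is just an illustrative helper:

```python
def training_run_cost(gpus: int, dollars_per_gpu_hour: float, days: float) -> float:
    """Rental-style compute cost of one uninterrupted training run."""
    gpu_hours = gpus * days * 24
    return gpu_hours * dollars_per_gpu_hour

cost = training_run_cost(gpus=30_000, dollars_per_gpu_hour=3.0, days=50)
print(f"${cost / 1e6:.0f}M")  # $108M
```

Change any one input and the headline number moves a lot, which is exactly why an unexplained "$100 million" is hard to compare against anything.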

Maybe it was an all-in cost, covering the compute for multiple training runs (the final run sits alongside smaller experiments, failed experiments, post-training, etc.), data costs (acquisition, labeling, storage), and employee costs (salaries).

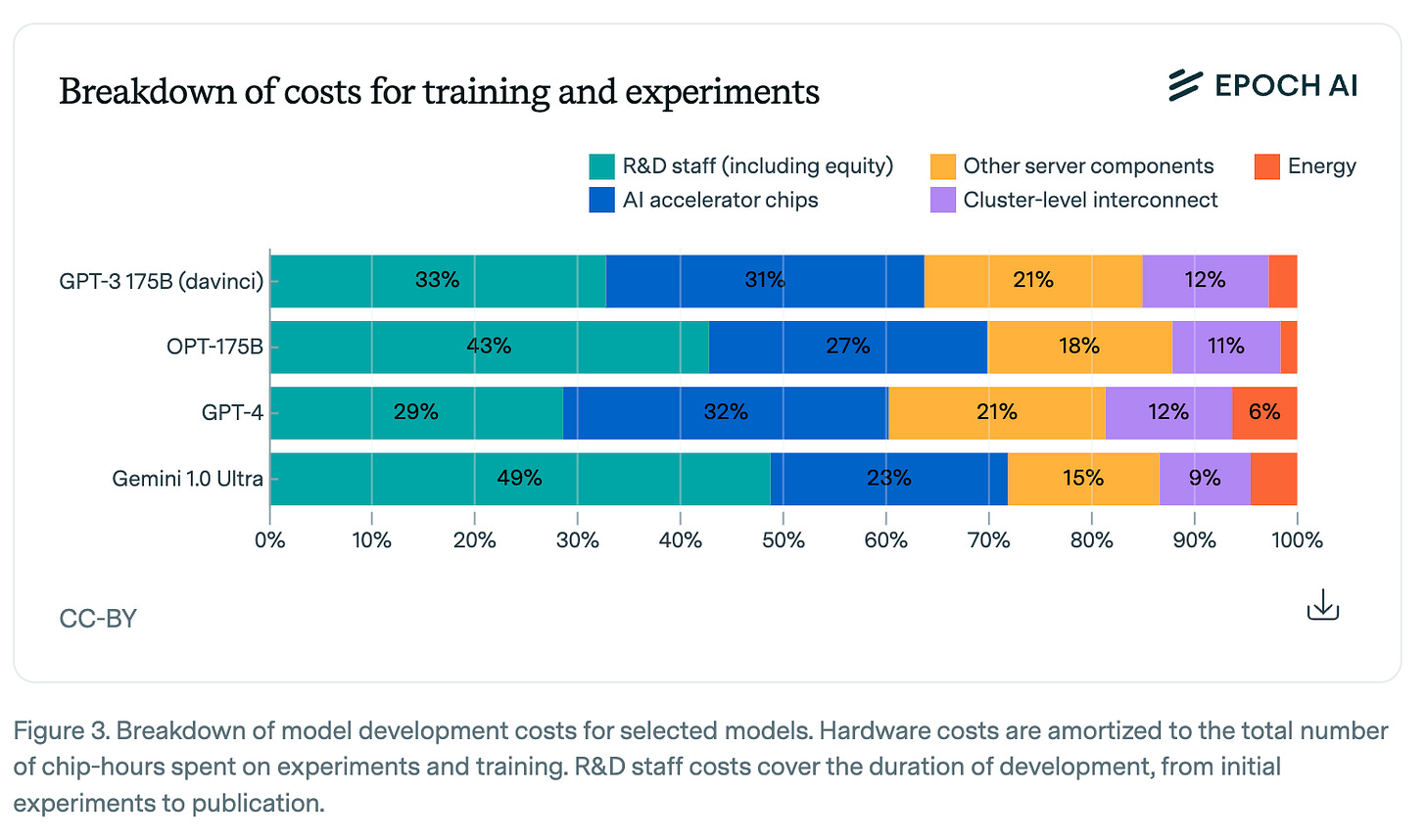

After all, the folks at EpochAI believe R&D staff costs alone could account for 30% to 50% of total training costs:

So, do AI labs spend more on compute than on staff? Our cost breakdown suggests this is the case, but staff costs are very significant. The combined cost of AI accelerator chips, other server components and interconnect hardware makes up 47–67% of the total, whereas R&D staff costs are 29–49% (including equity). Energy consumption makes up the remaining 2–6% of the cost.

We don’t know what Altman and Amodei included in their training cost figures.

What we do know: DeepSeek shared the compute cost for the final training run alone. Nothing more, nothing less. Have a look again:

Investors wrongly compared DeepSeek’s number with American labs; it was an apples-to-oranges comparison.
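For reference, here is the arithmetic behind the headline number. The GPU-hour breakdown and the $2 per H800 GPU-hour rental rate are the figures from the V3 technical report’s cost table as I read it; treat them as assumptions and check the paper before quoting them:

```python
# GPU-hours per phase, in thousands, as listed in the V3 technical report.
gpu_hours_k = {"pre-training": 2664, "context extension": 119, "post-training": 5}
RENTAL_RATE = 2.0  # assumed dollars per H800 GPU-hour, the rate the report uses

total_hours = sum(gpu_hours_k.values()) * 1_000
total_cost = total_hours * RENTAL_RATE
print(f"{total_hours / 1e6:.3f}M GPU-hours -> ${total_cost / 1e6:.3f}M")
# 2.788M GPU-hours -> $5.576M
```

Nothing in that sum covers staff, data, or earlier experiments. It is GPU-hours multiplied by a rental price, and nothing else.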

I can genuinely understand why American labs would talk in broad strokes and probably about all-in costs; likewise, I appreciate why a hamstrung AI lab would share only the final training run costs.

Let’s talk incentives. The CEOs of VC-backed American AI labs benefit from citing the largest plausible training cost. After all, it can scare off competition, is useful for recruiting, and supports their high valuation and need for more capital. Hey Satya, we’re gonna need you to keep writing those big checks…

On the other hand, a self-funded Chinese AI lab with restricted access to compute is incentivized to discuss the smallest number possible. Hey prospective engineers, check it out: our models are competitive because of our brains, not our brawn!

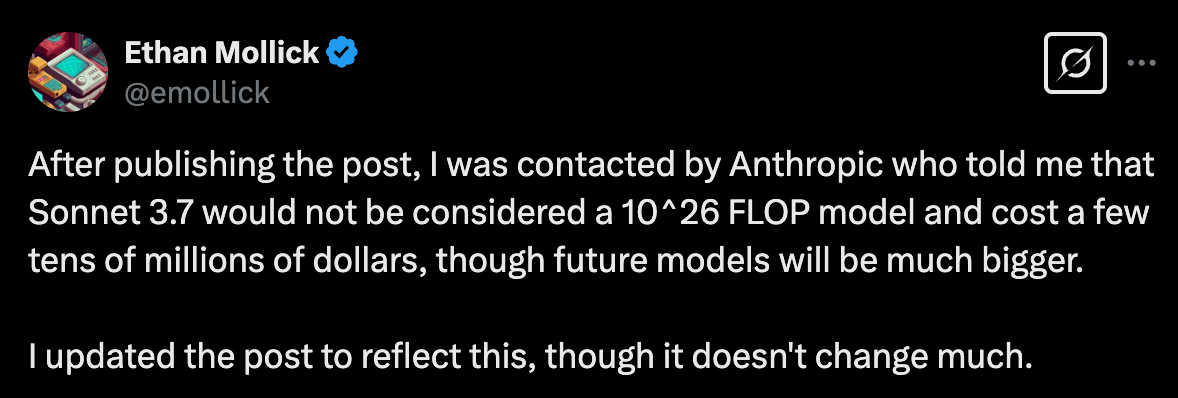

Anthropic is now responding with apples-to-apples numbers to help reframe the narrative. Amodei wrote:

I can only speak for Anthropic, but Claude 3.5 Sonnet is a mid-sized model that cost a few $10M’s to train (I won’t give an exact number)

And Anthropic later said Claude 3.7 Sonnet also cost only “tens of millions” to train.

Tens of millions is still a lot more than $5.6M.

V3 from First Principles

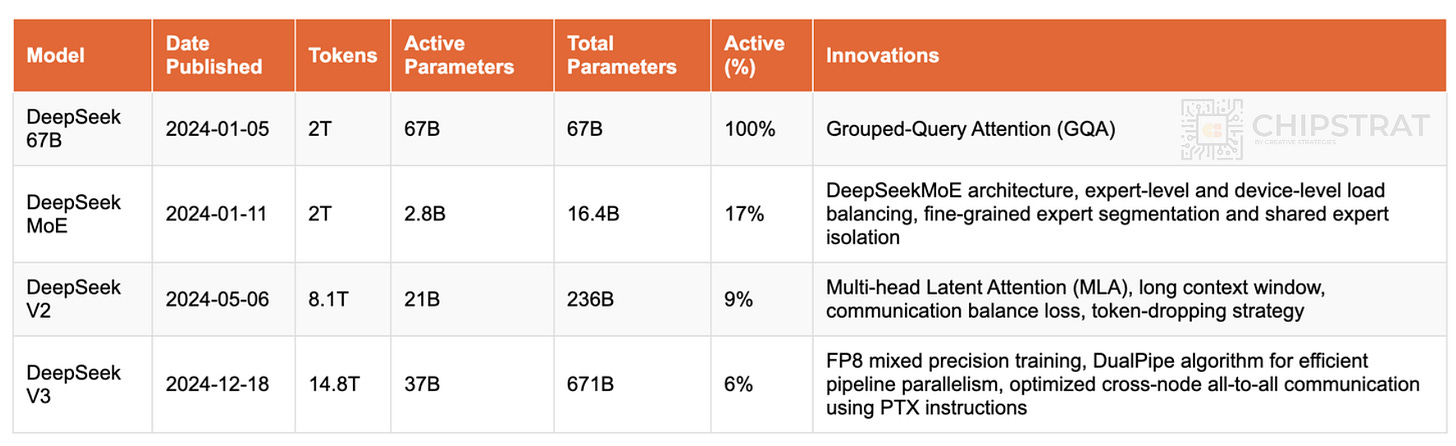

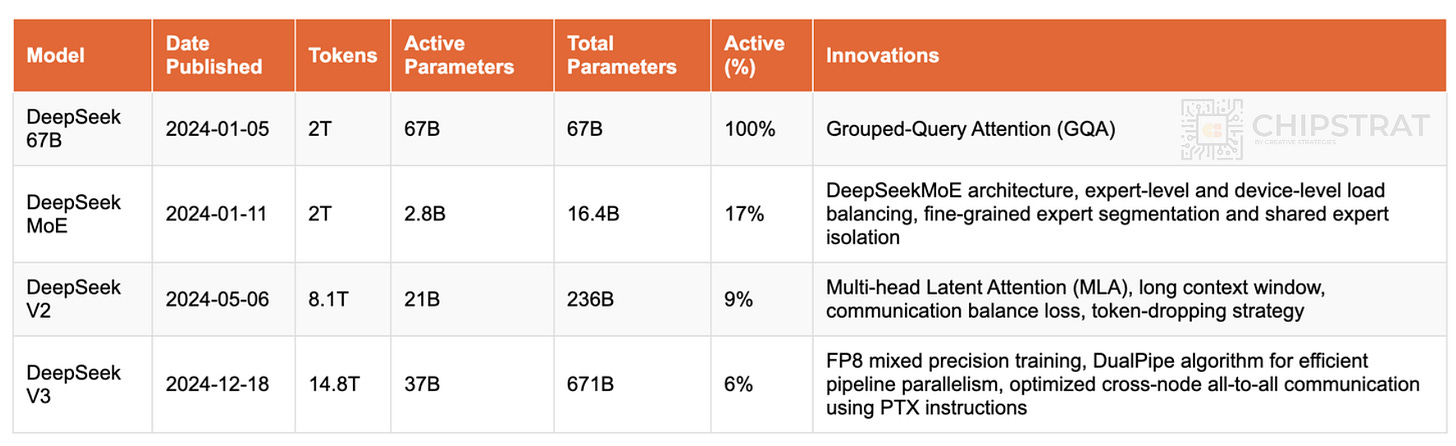

Recall from our previous article that DeepSeek has been incrementally improving for over a year now:

V3 follows the same playbook of communication and compute optimizations.

First, we’ll explore reduced precision (FP8) during training and communication optimizations. This will get technical, but it’s worth it. Then, we’ll draw big-picture conclusions based on everything we’ve learned.

FP8 Training

From the DeepSeek V3 Technical Report:

We design an FP8 mixed precision training framework and, for the first time, validate the feasibility and effectiveness of FP8 training on an extremely large-scale model.

Precision & Quantization

Some context for newer folks.

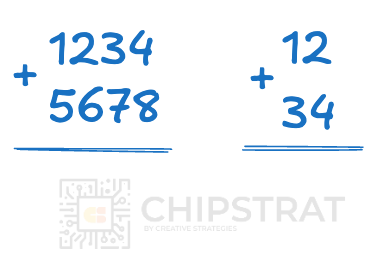

Precision refers to the number of bits used to store and compute values. FP32 (single-precision floating point) uses 32 bits, while FP16 (half-precision) uses only 16 bits. At a high level, fewer bits means faster math. Recall elementary school and how it’s faster to add two-digit numbers by hand than it would be to add four-digit numbers.

It’s a bit more nuanced in practice, but conceptually, lower precision is faster math and less data to store in memory.
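To see the storage-versus-accuracy tradeoff directly, here is a small sketch using only Python’s standard `struct` module, which can pack floats as 64-, 32-, and 16-bit IEEE 754 values. The standard library has no 8-bit float, so FP8 is not shown here; `round_trip` is a helper name I made up:

```python
import struct

def round_trip(value: float, fmt: str) -> float:
    """Store a Python float (FP64) in a narrower IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

x = 3.14159265358979
for name, fmt in [("FP64", "<d"), ("FP32", "<f"), ("FP16", "<e")]:
    stored = round_trip(x, fmt)
    size = struct.calcsize(fmt)
    print(f"{name}: {size} bytes, stored={stored!r}, error={abs(stored - x):.2e}")
```

Each halving of the byte count halves the memory and bandwidth needed per value, and the printed error shows what is given up in return.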

Quantization is the process of reducing the numerical precision of an AI model (e.g., FP32 → FP8) to reduce the memory needed and speed up inference.

The tradeoff of lower precision is slightly worse accuracy, but methods like post-training quantization and quantization-aware training help minimize these drawbacks.
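As a rough illustration of what FP32 → FP8 does to individual values, the sketch below rounds numbers onto the FP8 E4M3 grid (4 exponent bits, 3 mantissa bits, largest finite value 448) after scaling the whole tensor so its largest magnitude lands on 448. This is a simulation in plain Python, not how any framework implements it; the function names and the sample weights are made up:

```python
import math

E4M3_MAX = 448.0      # largest finite value in the E4M3 format
MIN_NORMAL_EXP = -6   # exponent of the smallest normal E4M3 value
MANTISSA_BITS = 3

def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +-448."""
    if x == 0.0:
        return 0.0
    mag = min(abs(x), E4M3_MAX)
    # frexp gives mag = m * 2**exp with 0.5 <= m < 1, so the binade exponent is exp - 1.
    exponent = max(math.frexp(mag)[1] - 1, MIN_NORMAL_EXP)
    step = 2.0 ** (exponent - MANTISSA_BITS)  # gap between neighbouring FP8 values here
    return math.copysign(round(mag / step) * step, x)

def quantize(values):
    """Per-tensor scaling: map the largest magnitude onto E4M3_MAX, then round."""
    scale = (max(abs(v) for v in values) or 1.0) / E4M3_MAX
    return [round_to_e4m3(v / scale) for v in values], scale

def dequantize(codes, scale):
    return [c * scale for c in codes]

weights = [0.8123, -0.0457, 0.3001, -0.6789]  # hypothetical FP32 weights
codes, scale = quantize(weights)
for w, r in zip(weights, dequantize(codes, scale)):
    print(f"{w:+.4f} -> {r:+.4f}  (error {abs(w - r):.4f})")
```

With only three mantissa bits, each value keeps roughly two significant binary digits after the leading one, which is the "slightly worse accuracy" in practice.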

Much work has been done to introduce lower precision into inference, but DeepSeek is one of the first to introduce it into training.

Mixed Precision

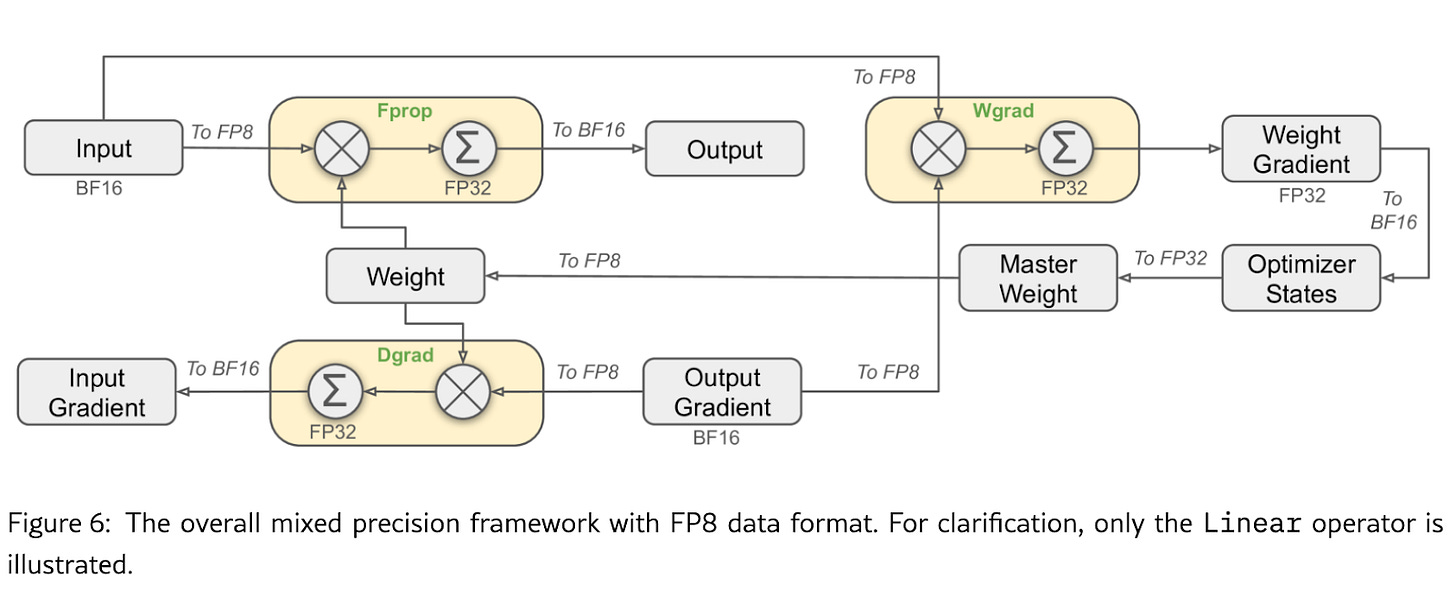

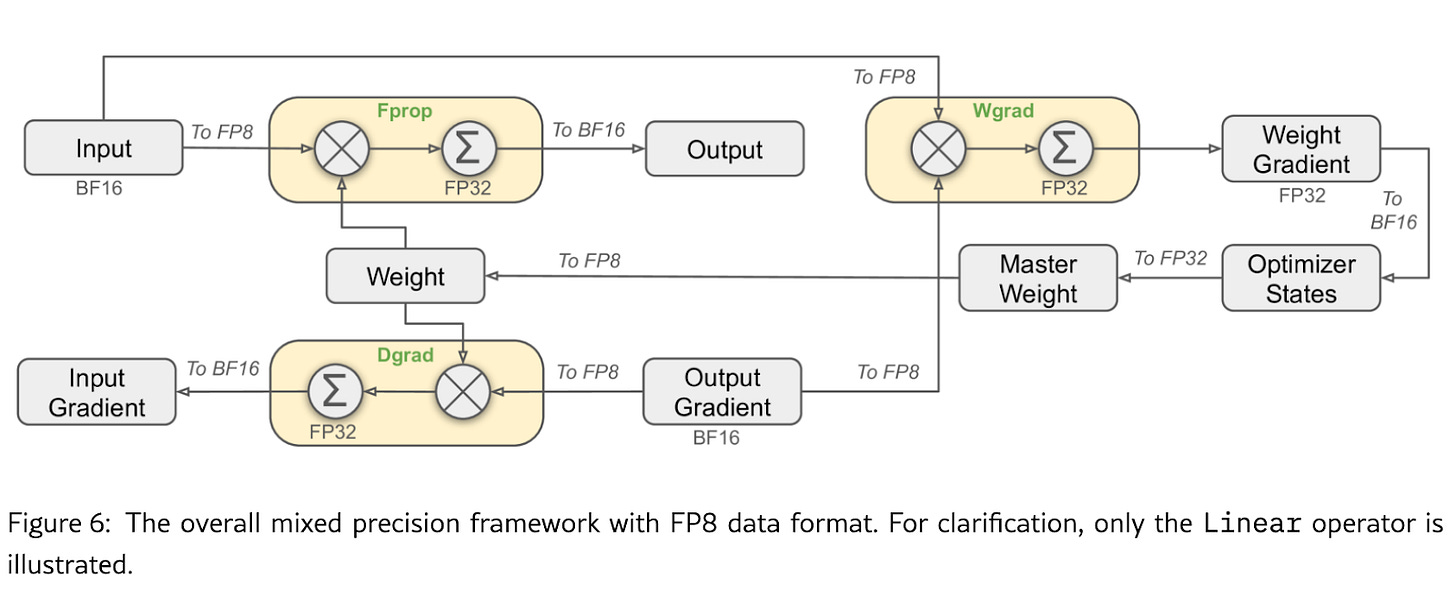

Specifically, DeepSeek introduces FP8 into a mixed-precision setup. Mixed precision means using a few different precisions:

Ideally, low-precision formats like FP8 would be used at every step of the training workflow. However, given their lower precision, rounding errors or the loss of small gradient values can degrade model performance or even cause training to diverge. Divergence means the model fails to learn properly; training fails to converge to a meaningful solution.

This is why mixed precision is the answer: it uses higher precision where necessary to avoid these issues and lower precision everywhere else.

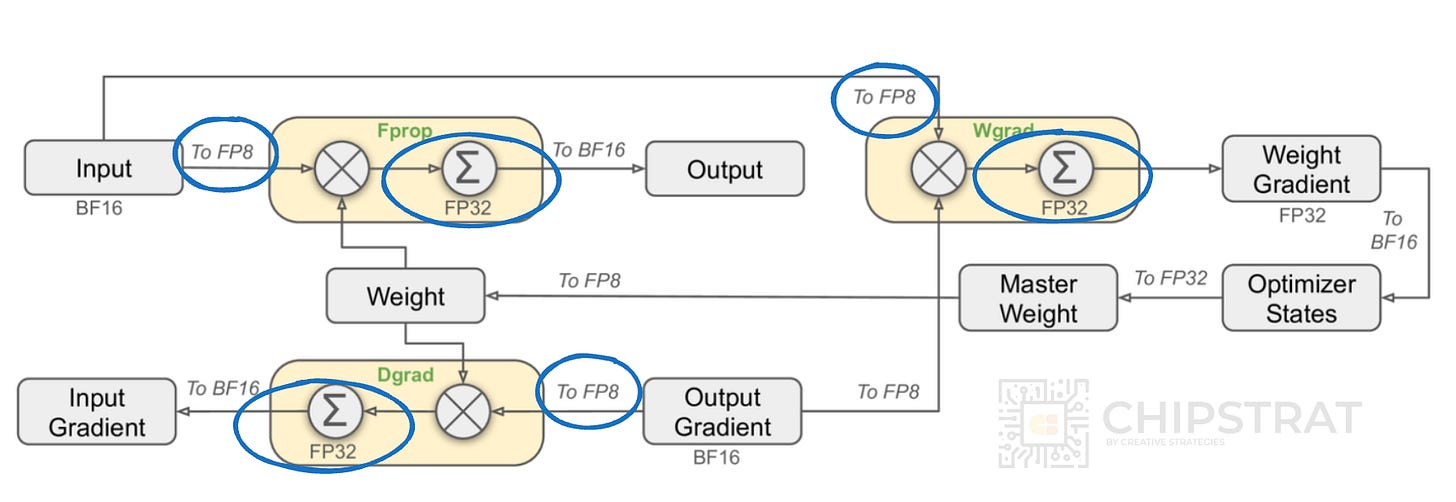

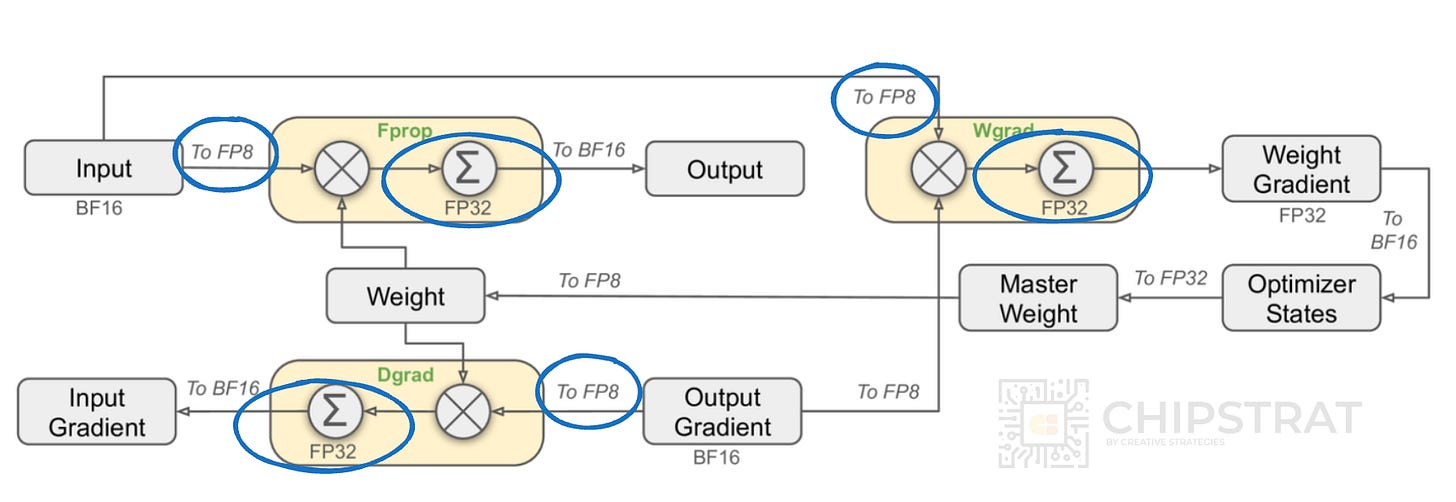

To maintain accuracy despite using FP8 for speed, DeepSeek keeps master weights and accumulates gradients in FP32. This prevents tiny updates from being lost due to rounding errors. There are always tradeoffs though; storing FP32 master weights and converting formats adds memory and compute overhead.
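The "tiny updates get lost" problem is easy to reproduce. In this sketch FP16 stands in for the low-precision format (Python’s `struct` module has no FP8, where the effect would be even stronger), and the update size and step count are hypothetical:

```python
import struct

def to_fp16(x: float) -> float:
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    return struct.unpack("<f", struct.pack("<f", x))[0]

UPDATE = 1e-4   # hypothetical per-step weight update
STEPS = 1_000

low = to_fp16(1.0)      # weight stored and updated entirely in low precision
master = to_fp32(1.0)   # FP32 master copy of the same weight

for _ in range(STEPS):
    low = to_fp16(low + UPDATE)        # rounds straight back to 1.0 every step
    master = to_fp32(master + UPDATE)  # keeps the small increment

print(low)               # 1.0
print(round(master, 3))  # 1.1
```

The low-precision weight never moves because each update is smaller than half the gap between 1.0 and the next representable value. The FP32 copy accumulates all thousand updates.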

So, if we look again at DeepSeek’s diagram, we can see places where DeepSeek converted to FP8 for efficient computation while accumulating in FP32 to maintain numerical precision.

The actual implementation details aren’t trivial. We needn’t know the details, but the paper describes techniques DeepSeek introduced, such as fine-grained quantization, promoting accumulation to CUDA Cores for higher precision, using the E4M3 format instead of E5M2 for Dgrad and Wgrad, and more.
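For the curious, the idea behind fine-grained quantization fits in a toy example: give each small group of values its own scaling factor, so one outlier cannot squash everything else toward zero. The sketch below simulates E4M3 rounding in plain Python and compares one scale for the whole tensor against one scale per group. The group size of 4 and the sample activations are made up for readability (the report describes far larger tiles and blocks), and the helper names are mine:

```python
import math

def round_to_e4m3(x: float) -> float:
    """Nearest FP8 E4M3 value (3 mantissa bits, max 448); NaN handling omitted."""
    if x == 0.0:
        return 0.0
    mag = min(abs(x), 448.0)
    exponent = max(math.frexp(mag)[1] - 1, -6)
    step = 2.0 ** (exponent - 3)
    return math.copysign(round(mag / step) * step, x)

def fake_quant(values, group_size):
    """Quantize then dequantize, giving each group of values its own scale."""
    out = []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        scale = (max(abs(v) for v in group) or 1.0) / 448.0
        out.extend(round_to_e4m3(v / scale) * scale for v in group)
    return out

def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

# Mostly tiny activations plus one large outlier in the first group.
acts = [0.0001 * (i + 1) for i in range(8)]
acts[0] = 50.0

per_tensor = mean_abs_error(acts, fake_quant(acts, group_size=len(acts)))
per_group = mean_abs_error(acts, fake_quant(acts, group_size=4))
print(f"per-tensor error: {per_tensor:.7f}")
print(f"per-group  error: {per_group:.7f}")
```

The second group contains no outlier, so its own scale spreads its values across the full FP8 range and the average error drops.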

DualPipe Algorithm and Computation-Communication Overlap

DeepSeek’s low training cost? Simple: fewer total GPU hours. And that comes down to cutting idle time. One of the biggest sources of inefficiency? Data movement. V3 optimized communication pipelines to make sure GPUs weren’t left waiting.

To understand how they achieved this, we need to examine how DeepSeek split the model across GPUs and managed the communication.

First, we see DeepSeek using pipeline, expert, and data parallelism:

The training of DeepSeek-V3 is supported by the HAI-LLM framework, an efficient and lightweight training framework crafted by our engineers from the ground up. On the whole, DeepSeek-V3 applies 16-way Pipeline Parallelism (PP), 64-way Expert Parallelism (EP) spanning 8 nodes, and ZeRO-1 Data Parallelism (DP)

These approaches help split the neural network into smaller components that can be computed in parallel during training.

Pipeline Parallelism

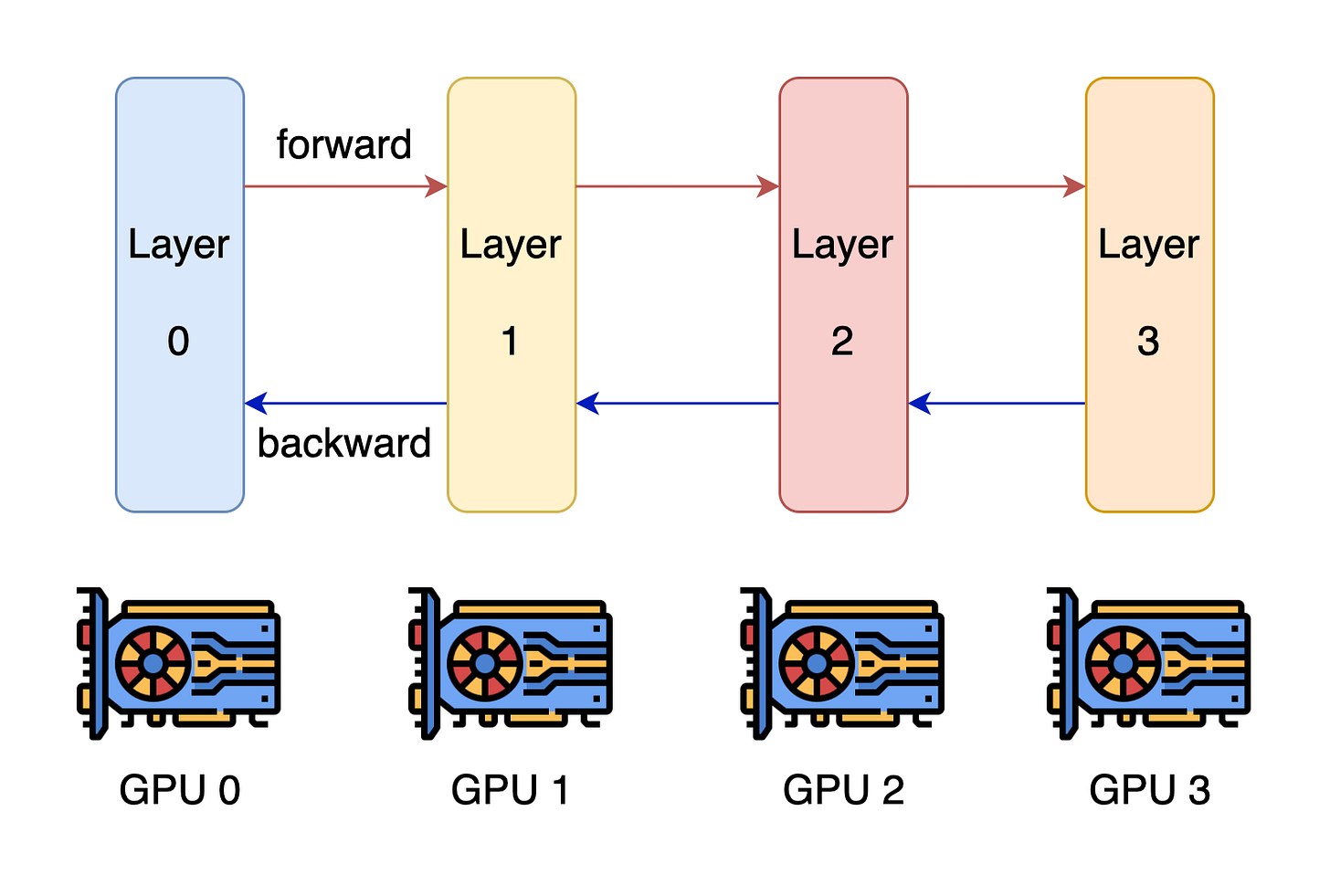

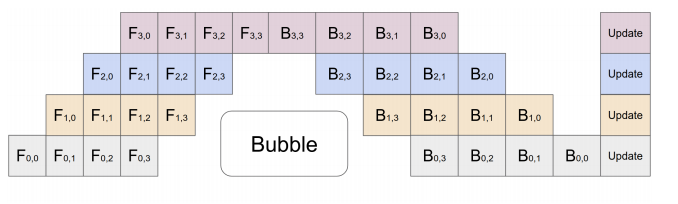

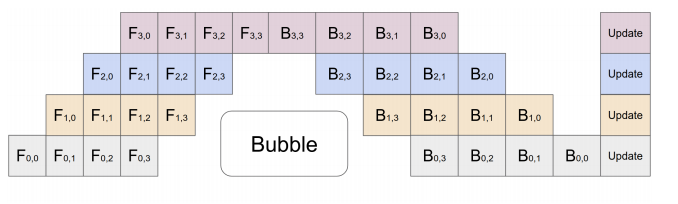

Pipeline parallelism has each GPU tackle one layer of the neural network (or, in practice, a contiguous block of layers):

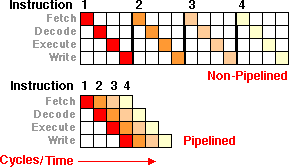

This allows for concurrent processing, where GPUs work in a sequence like an assembly line. Data moves forward through the layers across GPUs (0→1→2→3), and then the results move backward (3→2→1→0). It’s like basic computer architecture instruction pipelining for anyone familiar:

However, since the work flows downstream and then back upstream, some of the assembly line workers sit idle. These are pipeline stalls or pipeline bubbles.
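A tiny simulation makes the bubbles visible. It assumes the most naive schedule (all forward passes, then all backward passes) and that every forward or backward pass of one microbatch takes exactly one time slot. Those assumptions and the function names are mine, not DeepSeek’s:

```python
def gpipe_schedule(stages: int, microbatches: int):
    """Return a stages x time grid: 'F'/'B' when a stage is busy, '.' when idle."""
    fwd_len = stages + microbatches - 1
    grid = [["."] * (2 * fwd_len) for _ in range(stages)]
    for s in range(stages):
        for m in range(microbatches):
            grid[s][s + m] = "F"                           # forward flows 0 -> last
            grid[s][fwd_len + (stages - 1 - s) + m] = "B"  # backward flows last -> 0
    return grid

def bubble_fraction(stages: int, microbatches: int) -> float:
    """Share of all (stage, time slot) cells in which a GPU sits idle."""
    grid = gpipe_schedule(stages, microbatches)
    idle = sum(row.count(".") for row in grid)
    return idle / (stages * len(grid[0]))

for row in gpipe_schedule(stages=4, microbatches=4):
    print("".join(row))

print(bubble_fraction(4, 1))  # 0.75
print(bubble_fraction(4, 4))
```

Under these assumptions the idle share works out to (stages - 1) / (microbatches + stages - 1), so more microbatches shrink the bubbles but never remove them. Smarter schedules attack what is left.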

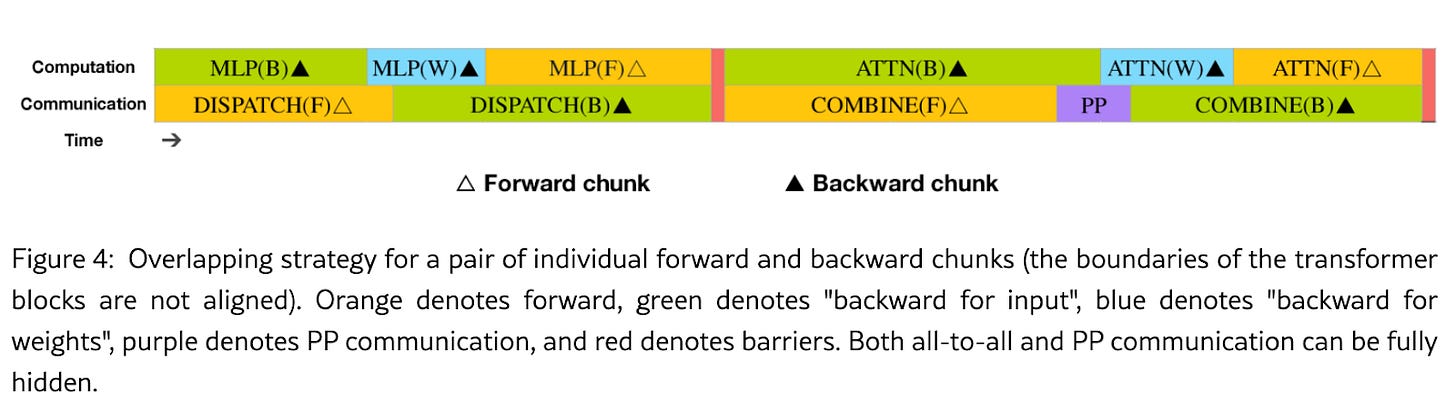

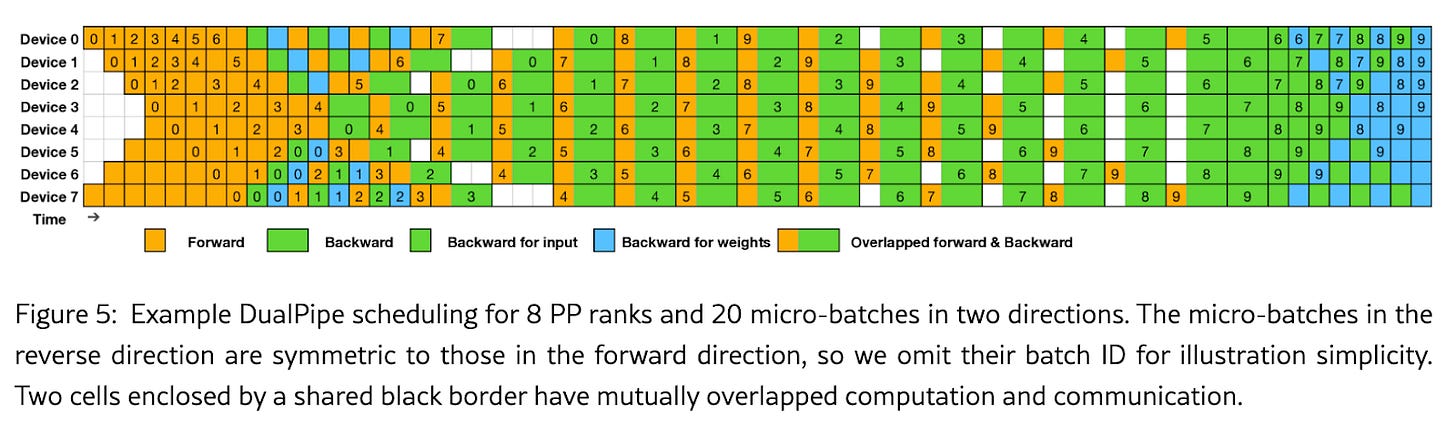

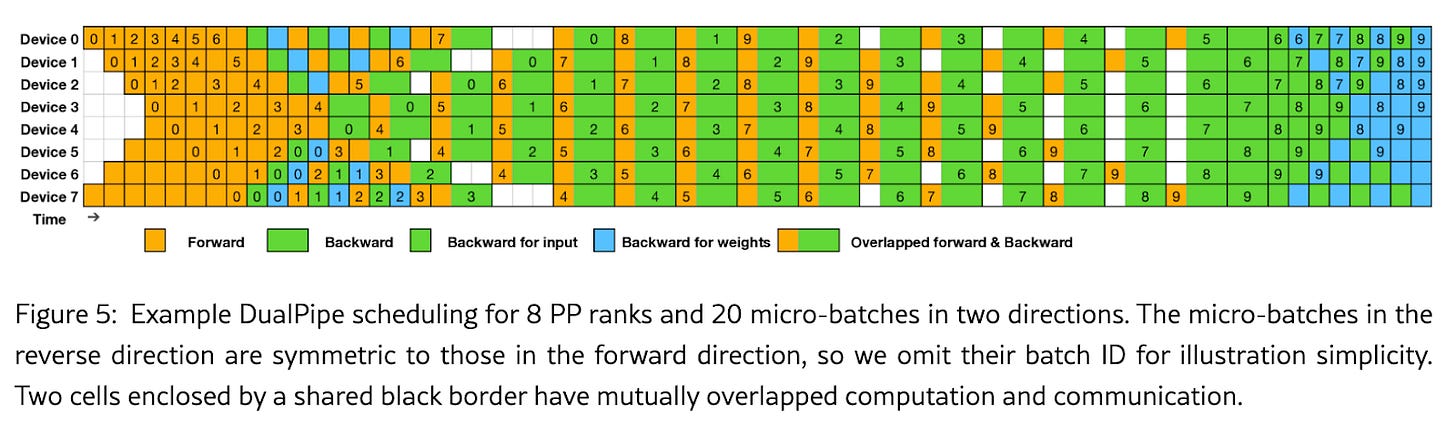

DeepSeek introduced a “DualPipe” algorithm intended to reduce the number of bubbles and GPU idle time:

In order to facilitate efficient training of DeepSeek-V3, we implement meticulous engineering optimizations. Firstly, we design the DualPipe algorithm for efficient pipeline parallelism. Compared with existing PP methods, DualPipe has fewer pipeline bubbles.

Expert Parallelism Too

DeepSeek uses both pipeline and expert parallelism. Instead of a GPU handling an entire layer, like in basic pipeline parallelism, it only processes a specific “expert” within that layer.

So, GPU 0 doesn’t get all of layer 0; it gets just a particular expert within layer 0.

This combination allows for very fine-grained distribution of the model across GPUs, but it also requires lots of communication, since tokens must be sent to whichever GPUs host their experts and the results sent back.

Don’t Forget Data Parallelism

DeepSeek also uses data parallelism, which means each GPU sees a different part of the training data.

With expert parallelism AND pipeline parallelism AND data parallelism, GPU 0 is responsible for processing only a subset of experts within layer 0 on a subset of training data.
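To make the three-way split concrete, here is one hypothetical way to factor a flat GPU rank into pipeline, data, and expert coordinates. The 16-way PP, 64-way EP, 8 GPUs per node, and 2048 GPUs come from the report; the ordering of the dimensions and the resulting 2-way replica count are my assumptions, since the report does not spell out the exact layout:

```python
from typing import NamedTuple

WORLD_SIZE = 2048        # GPUs in the cluster (from the report)
PP = 16                  # pipeline-parallel stages (from the report)
EP = 64                  # expert-parallel ranks (from the report)
GPUS_PER_NODE = 8        # from the report
DP = WORLD_SIZE // (PP * EP)  # 2 replicas under this assumed factoring

class Coord(NamedTuple):
    pp_stage: int    # which slice of layers this GPU holds
    dp_replica: int  # which shard of the training data it sees
    ep_rank: int     # which subset of experts it hosts
    node: int        # physical node, 8 GPUs each

def coord(rank: int) -> Coord:
    """Assumed layout: expert rank varies fastest, pipeline stage slowest."""
    if not 0 <= rank < WORLD_SIZE:
        raise ValueError(f"rank {rank} out of range")
    return Coord(
        pp_stage=rank // (EP * DP),
        dp_replica=(rank // EP) % DP,
        ep_rank=rank % EP,
        node=rank // GPUS_PER_NODE,
    )

print(coord(0))
print(coord(2047))
```

Under this layout each group of 64 consecutive ranks fills exactly 8 nodes, which lines up with the report’s "64-way Expert Parallelism (EP) spanning 8 nodes."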

Pop the Bubbles

DeepSeek’s DualPipe tries to schedule all computation so that it reduces the pipeline bubbles across its 2048-GPU system.

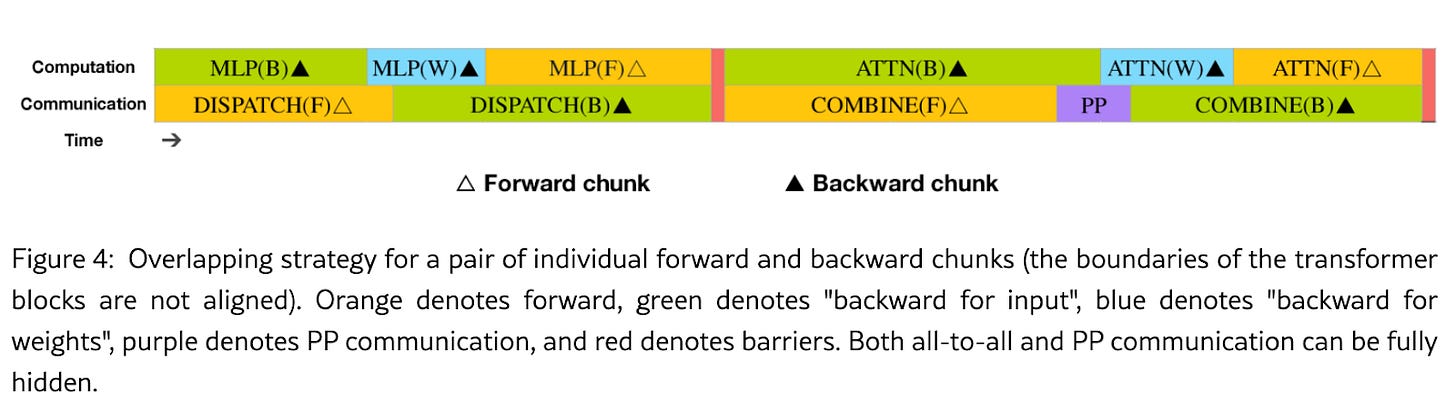

We design an innovative pipeline parallelism algorithm called DualPipe, which not only accelerates model training by effectively overlapping forward and backward computation-communication phases, but also reduces the pipeline bubbles.
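The payoff of overlapping computation with communication can be seen in a toy timing model. This is not DualPipe itself, only a generic two-stage pipeline in which one chunk’s communication runs while the next chunk computes; the chunk count and per-chunk timings are made up for illustration:

```python
def serial_time(chunks: int, compute: float, comm: float) -> float:
    """Every chunk computes, then waits for its own communication to finish."""
    return chunks * (compute + comm)

def overlapped_time(chunks: int, compute: float, comm: float) -> float:
    """Chunk i's communication runs while chunk i+1 computes."""
    return compute + (chunks - 1) * max(compute, comm) + comm

chunks, compute, comm = 8, 1.0, 1.0  # hypothetical units of time
print(serial_time(chunks, compute, comm))      # 16.0
print(overlapped_time(chunks, compute, comm))  # 9.0
```

When compute and communication take about the same time, hiding one behind the other nearly halves the wall-clock time, which is why the computation-to-communication ratio matters so much.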

V3 also minimizes idle time by employing a Tetris-like scheduling strategy to ensure GPUs can request and process data simultaneously. Don’t sweat the details, just know the data is there when the GPU needs it!

All of this effort works! Here’s a nice illustration from the paper demonstrating the reduction in bubbles (white cells) from scheduling computation and communication carefully.

Efficient Cross-Node All-to-All Communication

The DeepSeek team was very thoughtful about their communication efficiency.

When they must send data, they try to send it by the fastest route possible.

A Quick Communication Refresher

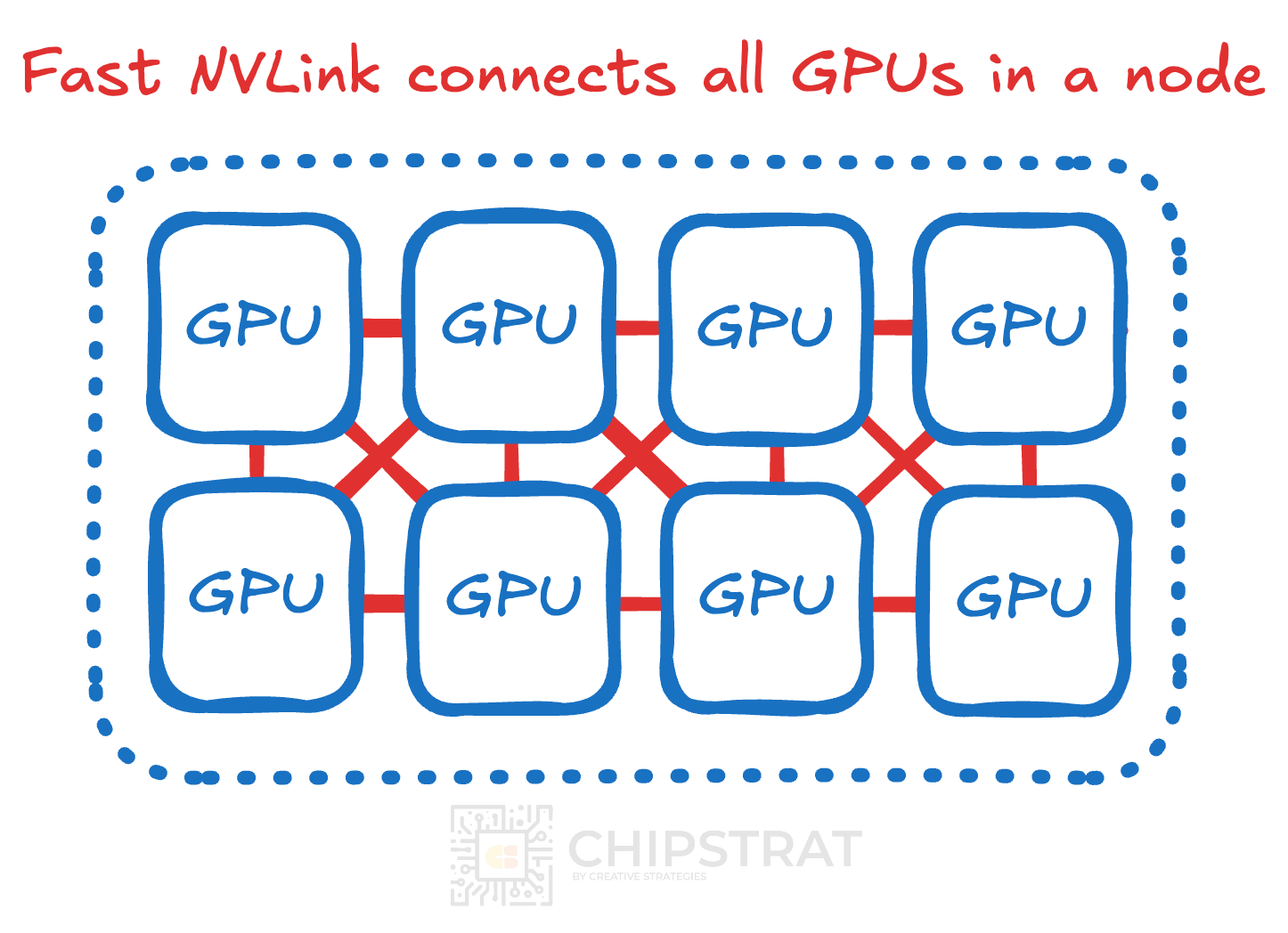

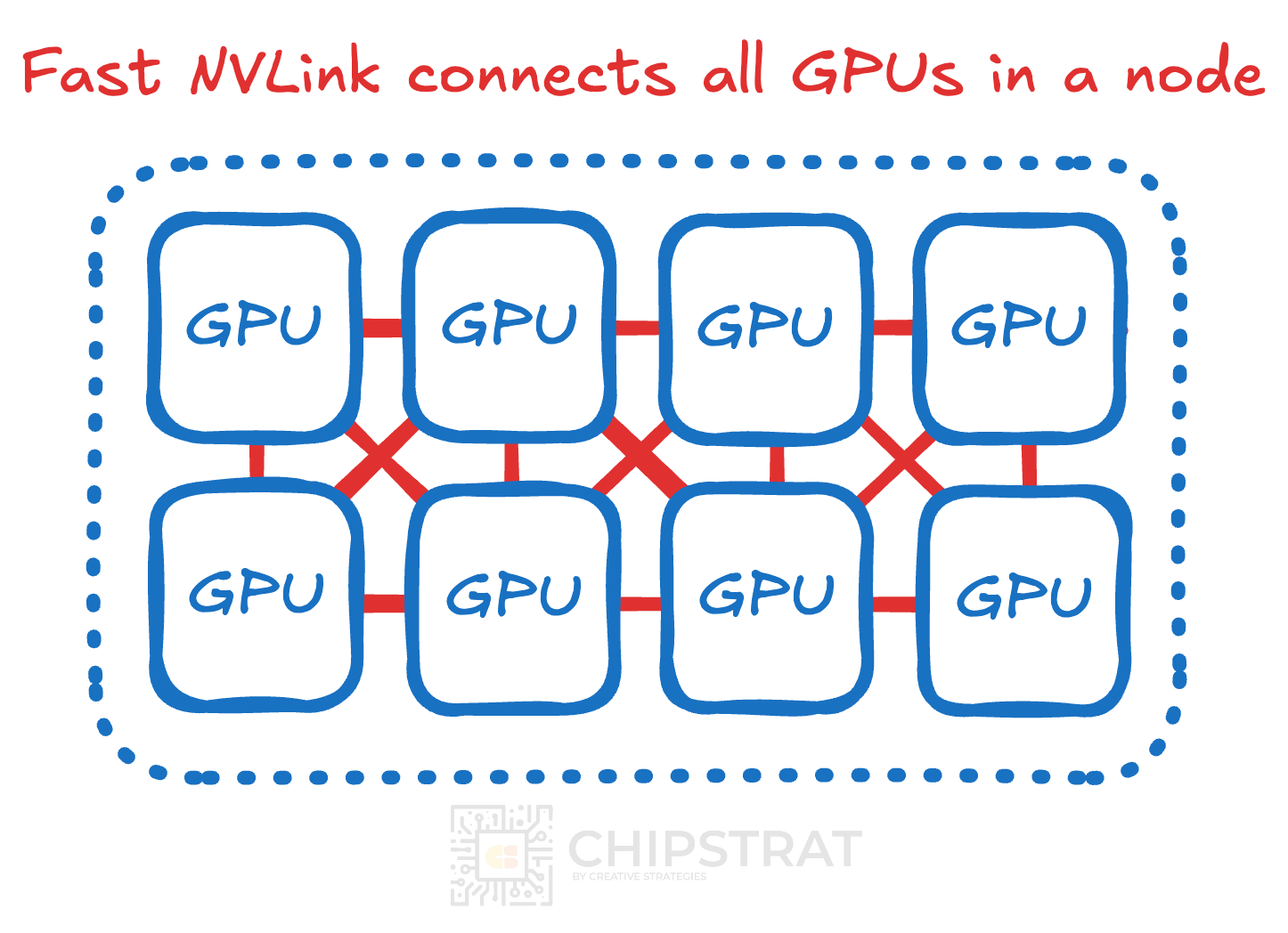

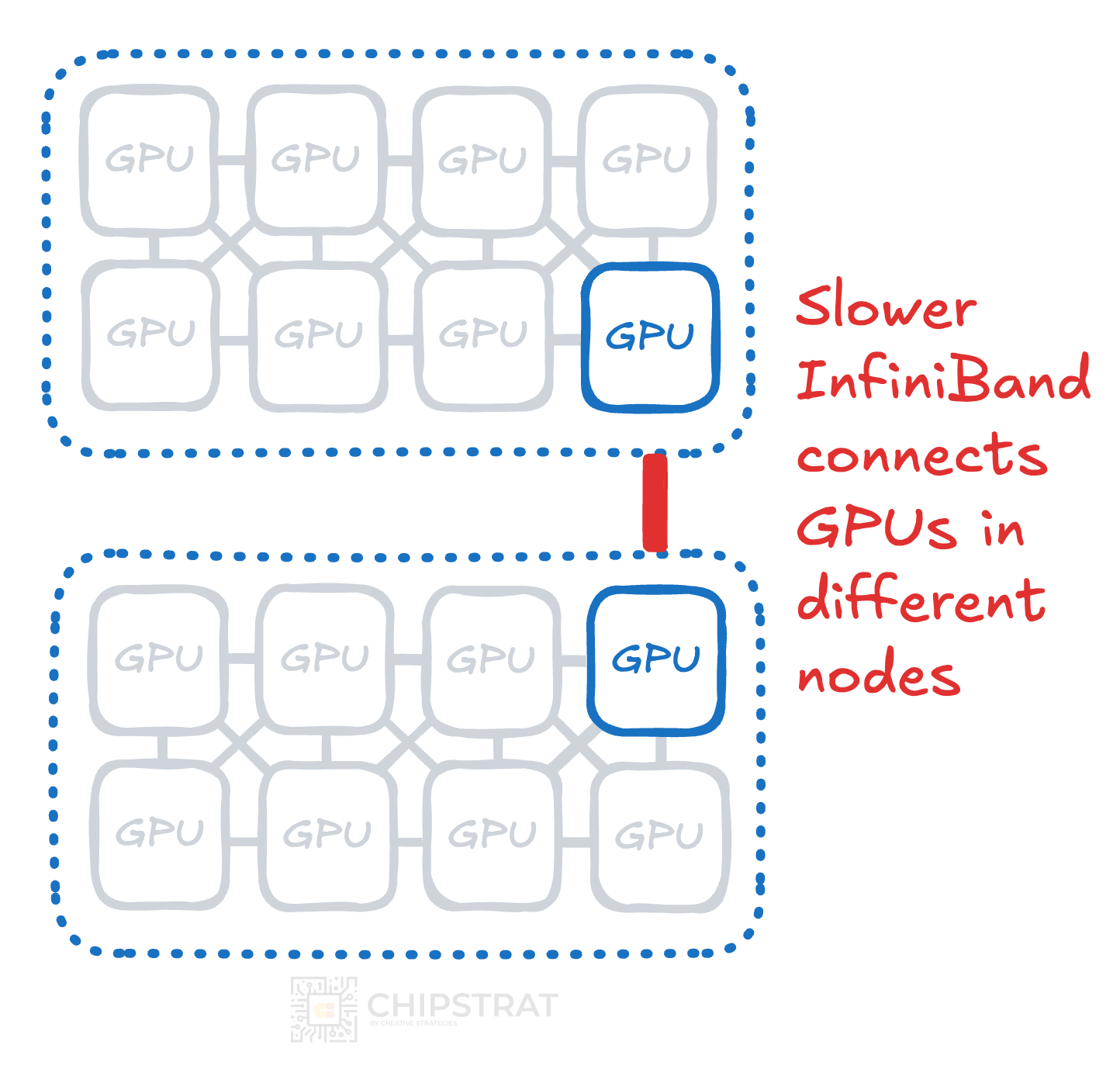

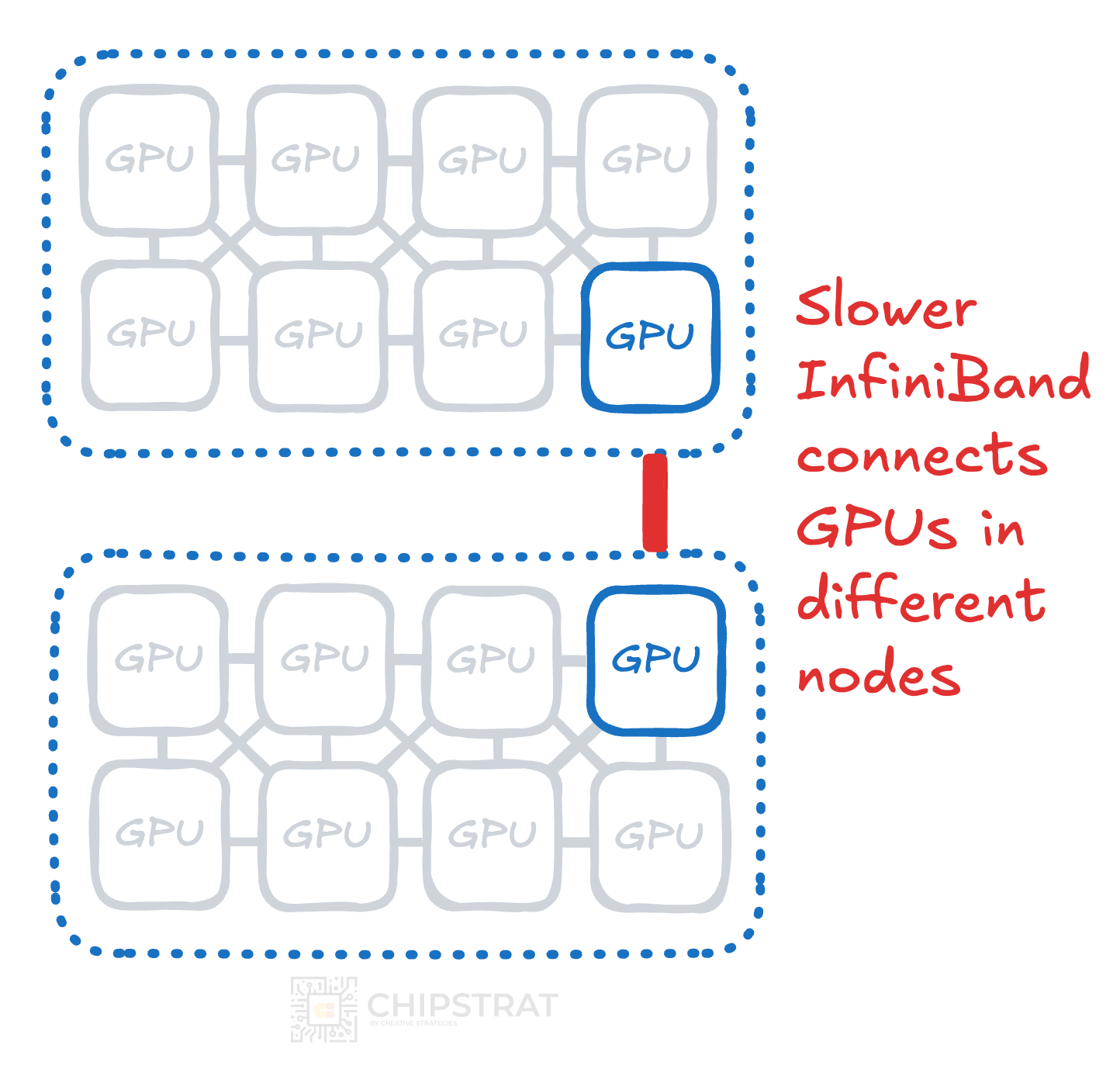

DeepSeek reminds us that NVLink is faster than InfiniBand.

DeepSeek-V3 is trained on a cluster equipped with 2048 NVIDIA H800 GPUs. Each node in the H800 cluster contains 8 GPUs connected by NVLink and NVSwitch within nodes. Across different nodes, InfiniBand (IB) interconnects are utilized to facilitate communications

To be specific, in our cluster, cross-node GPUs are fully interconnected with IB, and intra-node communications are handled via NVLink. NVLink offers a bandwidth of 160 GB/s, roughly 3.2 times that of IB (50 GB/s).

Note: DeepSeek mentions an NVLink bandwidth of 160 GB/s, much lower than the reported H800 (constrained) bandwidth of 400 GB/s. I’m assuming this is a “real-world” measurement vs theoretical peak, but not sure?

DeepSeek’s setup results in a high volume of GPU communication. Intranode NVLink communication is fast:

But InfiniBand internode communication is slower:

The goal is straightforward: can most communication occur on the fast NVLink channels and not slower InfiniBand?

Simultaneously, DeepSeek sought to reduce the number of compute cores needed to manage all this communication coordination.
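To put the two bandwidth figures in perspective, here is an idealized transfer-time estimate. The 160 GB/s and 50 GB/s values are the ones quoted from the report; the 1 GB payload is hypothetical, and latency and contention are ignored:

```python
NVLINK_GB_PER_S = 160.0  # intra-node bandwidth quoted in the report
IB_GB_PER_S = 50.0       # cross-node bandwidth quoted in the report

def transfer_ms(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time in milliseconds, ignoring latency and contention."""
    return payload_gb / bandwidth_gb_per_s * 1_000

payload = 1.0  # hypothetical 1 GB of activations
print(f"NVLink: {transfer_ms(payload, NVLINK_GB_PER_S):.2f} ms")  # 6.25 ms
print(f"IB:     {transfer_ms(payload, IB_GB_PER_S):.2f} ms")      # 20.00 ms
print(f"ratio:  {NVLINK_GB_PER_S / IB_GB_PER_S:.1f}x")            # 3.2x
```

Every byte that can stay inside a node instead of crossing nodes costs roughly a third as much time.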

A Quick Computation Refresher

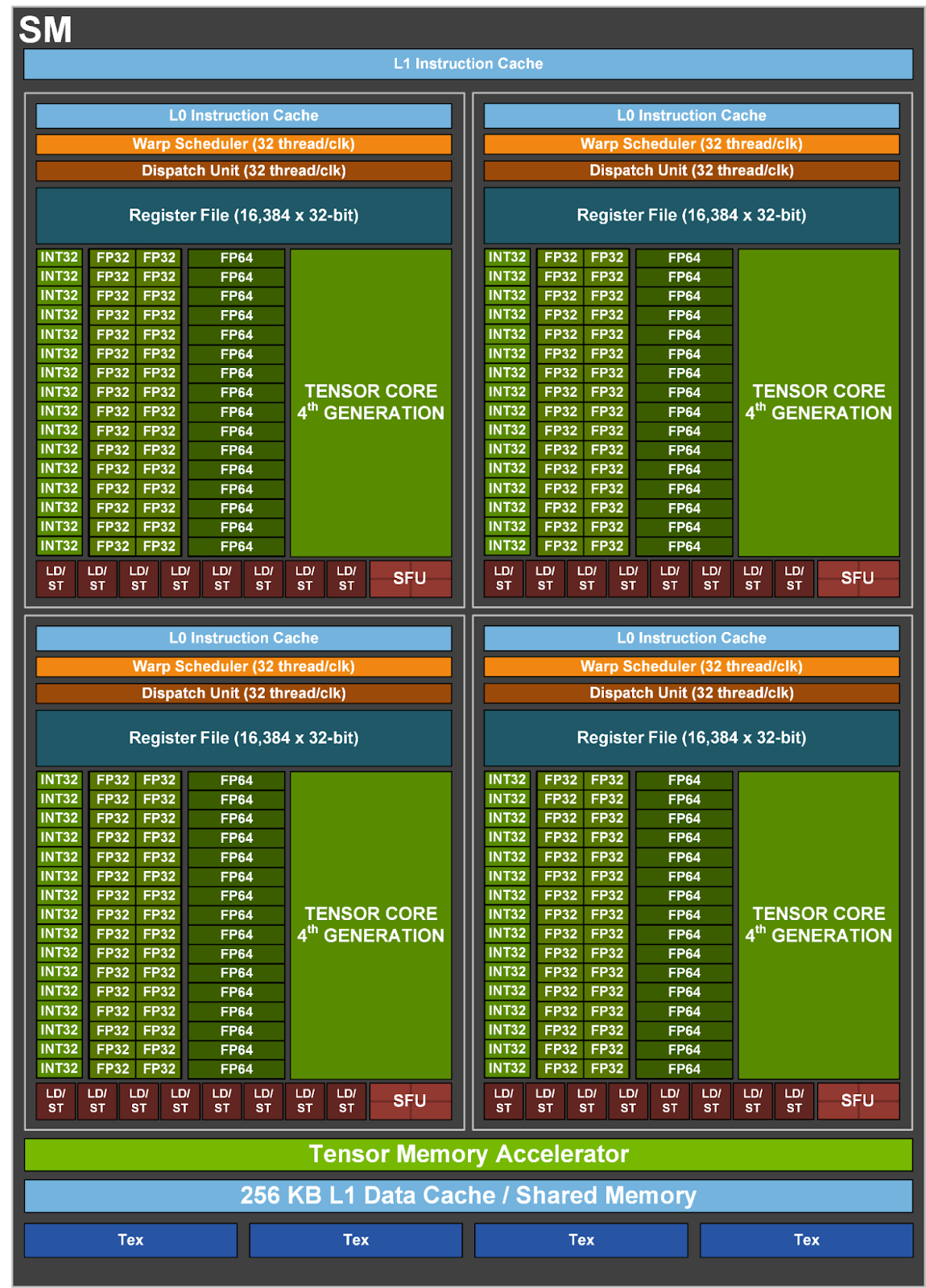

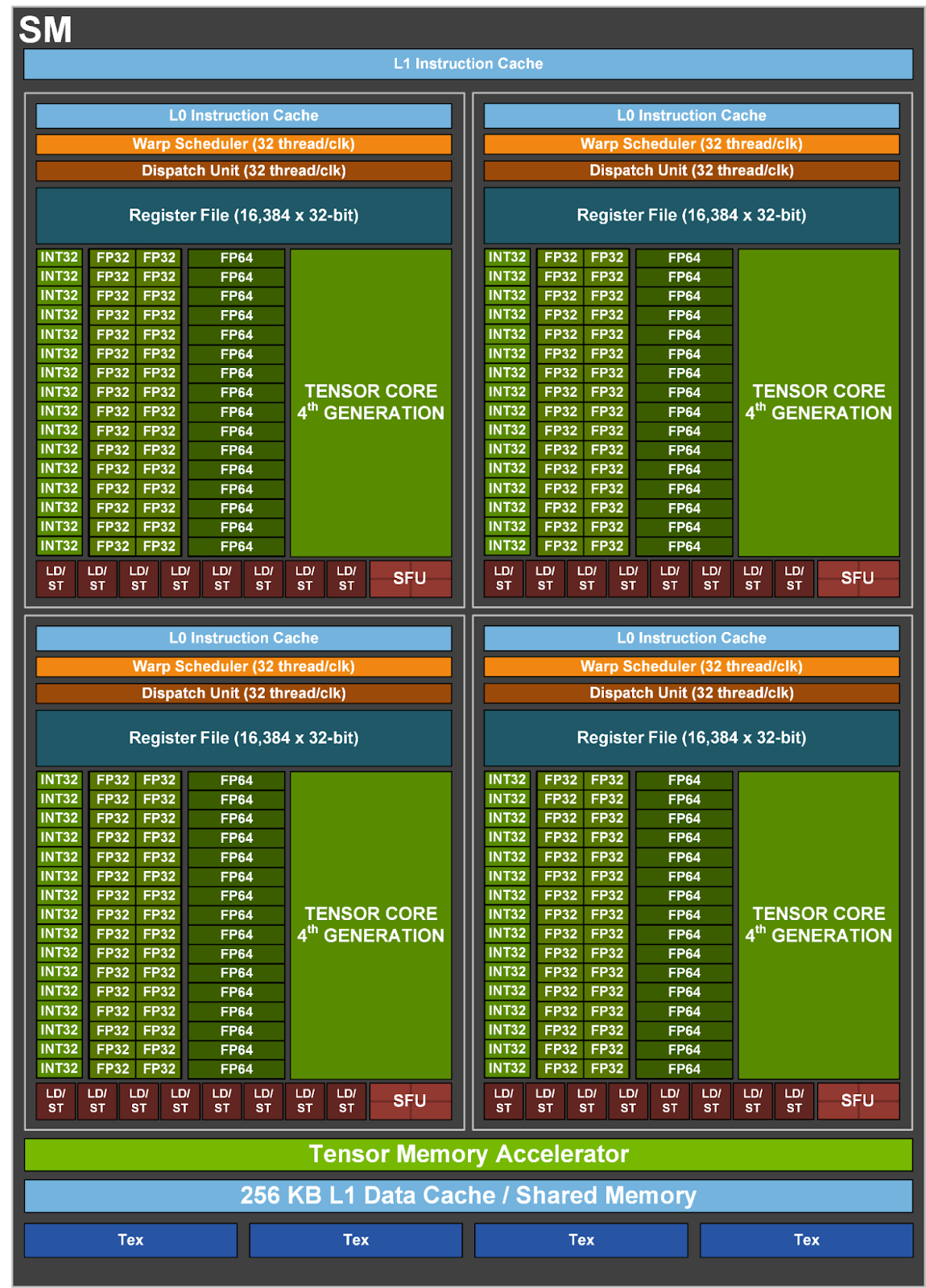

Nvidia GPUs have 100+ Streaming Multiprocessors (SMs), which you can loosely think of as a “GPU core.” Similar to a CPU core, an SM has its own instruction control, registers and memory, and compute units. The GPU SM has tensor cores full of specialized matrix multiplication circuitry.

All those tensor cores sit idle when the SM is just scheduling communication.

Communication Efficiency

Hence, DeepSeek-V3 aims to limit the number of SMs needed to handle communication so that more SMs can focus on computation.

We customize efficient cross-node all-to-all communication kernels (including dispatching and combining) to conserve the number of SMs dedicated to communication.

DeepSeek implemented the communication so that as much communication as possible was done on the faster NVLink and as little as possible was done on the slower IB:

To effectively leverage the different bandwidths of IB and NVLink, we limit each token to be dispatched to at most 4 nodes, thereby reducing IB traffic. For each token, when its routing decision is made, it will first be transmitted via IB to the GPUs with the same in-node index on its target nodes. Once it reaches the target nodes, we will endeavor to ensure that it is instantaneously forwarded via NVLink to specific GPUs that host their target experts, without being blocked by subsequently arriving tokens
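The two-hop routing in that passage can be sketched as a small planning function. The GPU numbering (global id = node × 8 + in-node index) and the name `plan_dispatch` are assumptions for illustration; the real kernels move batches of tokens on the GPU, not Python lists:

```python
GPUS_PER_NODE = 8
MAX_NODES_PER_TOKEN = 4  # the dispatch limit quoted from the report

def plan_dispatch(src_gpu: int, target_gpus: list[int]):
    """Plan one token's route: at most one IB send per target node, then NVLink."""
    src_node, src_local = divmod(src_gpu, GPUS_PER_NODE)
    by_node: dict[int, list[int]] = {}
    for gpu in target_gpus:
        by_node.setdefault(gpu // GPUS_PER_NODE, []).append(gpu)
    if len(by_node) > MAX_NODES_PER_TOKEN:
        raise ValueError("token routed to too many nodes")

    ib_hops, nvlink_hops = [], []
    for node, gpus in sorted(by_node.items()):
        if node == src_node:
            relay = src_gpu  # already on the right node, no IB needed
        else:
            relay = node * GPUS_PER_NODE + src_local  # same in-node index
            ib_hops.append((src_gpu, relay))
        # Fan out inside the node over NVLink to the GPUs hosting the experts.
        nvlink_hops.extend((relay, gpu) for gpu in gpus if gpu != relay)
    return ib_hops, nvlink_hops

# Hypothetical token on GPU 3 whose 8 experts live on 3 other nodes.
ib, nv = plan_dispatch(3, [9, 12, 14, 17, 19, 22, 26, 27])
print("IB sends:    ", ib)
print("NVLink sends:", nv)
print(f"{len(ib)} IB transfers instead of 8 direct ones")
```

Grouping by node is the whole trick: the slow link carries the token once per node, and the fast link handles the duplication.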

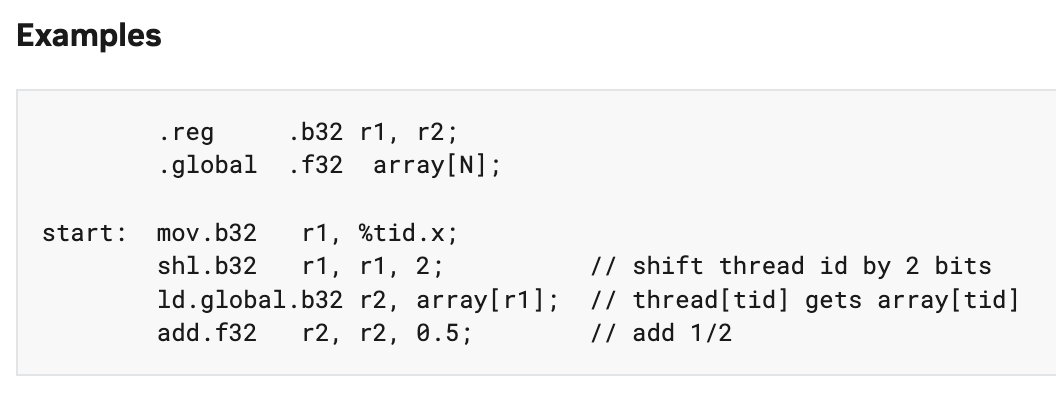

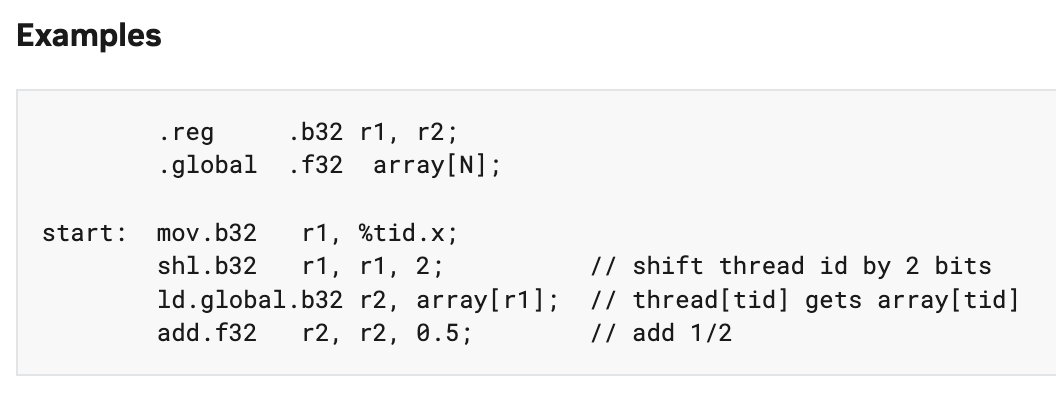

And here’s where it gets awesome. To eke out the absolute best efficiency possible, DeepSeek dove down a layer from CUDA into PTX-land to do some nasty hacking to get this all to work:

Specifically, we employ customized PTX (Parallel Thread Execution) instructions and auto-tune the communication chunk size, which significantly reduces the use of the L2 cache and the interference to other SMs.

PTX: What It Is and Isn’t

PTX, according to Nvidia’s docs:

PTX provides a stable programming model and instruction set for general purpose parallel programming. It is designed to be efficient on NVIDIA GPUs supporting the computation features defined by the NVIDIA Tesla architecture. High level language compilers for languages such as CUDA and C/C++ generate PTX instructions, which are optimized for and translated to native target-architecture instructions.

The goals for PTX include the following:

- Provide a stable ISA that spans multiple GPU generations.

- Achieve performance in compiled applications comparable to native GPU performance.

- Provide a machine-independent ISA for C/C++ and other compilers to target.

- Provide a code distribution ISA for application and middleware developers.

- Provide a common source-level ISA for optimizing code generators and translators, which map PTX to specific target machines.

- Facilitate hand-coding of libraries, performance kernels, and architecture tests.

- Provide a scalable programming model that spans GPU sizes from a single unit to many parallel units.

Key points for you dear reader:

- CUDA is a high-level programming interface.

- CUDA compiles down to PTX, a stable intermediate representation.

- This stability ensures backward compatibility across Nvidia’s GPU generations, from high-performance Blackwell AI clusters to consumer RTX graphics cards.

It’s an assembly-like language:

Assembly is not fun to write by hand, but it is a great way to squeeze as much performance out of a system as possible. Programmers can directly manipulate registers and memory to speed up program execution and reduce the total memory footprint.

Back in the day, expert assembly programmers were highly valued because compute and memory were constrained; the Intel 8086 could address up to 1MB of memory and ran at speeds up to 10MHz.

Of course, over time, computers got faster and could address more memory, and programmers could move up to higher abstraction levels for easier programming; e.g. from C and C++ to Java and Python.

Why would DeepSeek use PTX? They’re hardware-constrained — trying to get the most out of their H800s with halved interconnect bandwidth requires rolling up their sleeves and diving down below CUDA into PTX.

As the Nvidia docs state, PTX is for hand-coding of performance kernels.

What PTX is not

There was an incorrect take that went viral along the lines of “DeepSeek uses PTX, which means the CUDA moat is dead!”

Uhhh, what?

Again, PTX can be thought of as an Nvidia-specific assembly language, and CUDA is a human-friendly abstraction on top of it. You don’t get one without the other.

PTX is a tool for extreme optimization used only when necessary. And the United States Government made it necessary. But that has nothing to do with the CUDA moat.

Suggestions on Hardware Design

Amazingly, DeepSeek’s V3 report gives public feedback to Nvidia and any other AI accelerator vendors listening (including Huawei).

For example, V3 points out how inefficient it is to use 20 of 132 SMs for communication; why not use all 132 SMs for compute and offload the communication to a hardware coprocessor?

We aspire to see future vendors developing hardware that offloads these communication tasks from the valuable computation unit SM, serving as a GPU co-processor or a network co-processor like NVIDIA SHARP Graham et al. (2016). Furthermore, to reduce application programming complexity, we aim for this hardware to unify the IB (scale-out) and NVLink (scale-up) networks from the perspective of the computation units. With this unified interface, computation units can easily accomplish operations such as read, write, multicast, and reduce across the entire IB-NVLink-unified domain via submitting communication requests based on simple primitives.
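How much is at stake with those 20 of 132 SMs? A quick calculation using only the two numbers above, and assuming (optimistically) that a co-processor would free every one of the communication SMs:

```python
TOTAL_SMS = 132  # SMs per GPU, as cited from the report
COMM_SMS = 20    # SMs the report says are used for communication

compute_sms = TOTAL_SMS - COMM_SMS
print(f"SMs left for compute: {compute_sms}")                                  # 112
print(f"share spent on communication: {COMM_SMS / TOTAL_SMS:.1%}")             # 15.2%
print(f"compute SMs gained by offloading: {TOTAL_SMS / compute_sms - 1:.1%}")  # 17.9%
```

Roughly one SM in seven is doing traffic management instead of matrix math, so it is not hard to see why DeepSeek asks for dedicated hardware.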

DeepSeek proposes several additional hardware enhancements in the paper, including improved Tensor Core accumulation precision, fine-grained quantization, optimized online quantization, and streamlined transposed GEMM operations.

These seem useful. I wonder how closely DeepSeek works with Nvidia, Huawei, AMD, and others to share this feedback in real-time?

Lessons Learned

Behind the paywall we’ll walk through six lessons learned from studying DeepSeek’s V3 and earlier model reports from first principles.