Thinking about AI

A few weeks ago, I gave a presentation summarizing my current thoughts and observations on this AI moment. The most important caveat is that we are in one of those moments where there are more questions than answers. All we can do is rely on our experience and on historical patterns as a guide to what may happen.

History as Our Guide

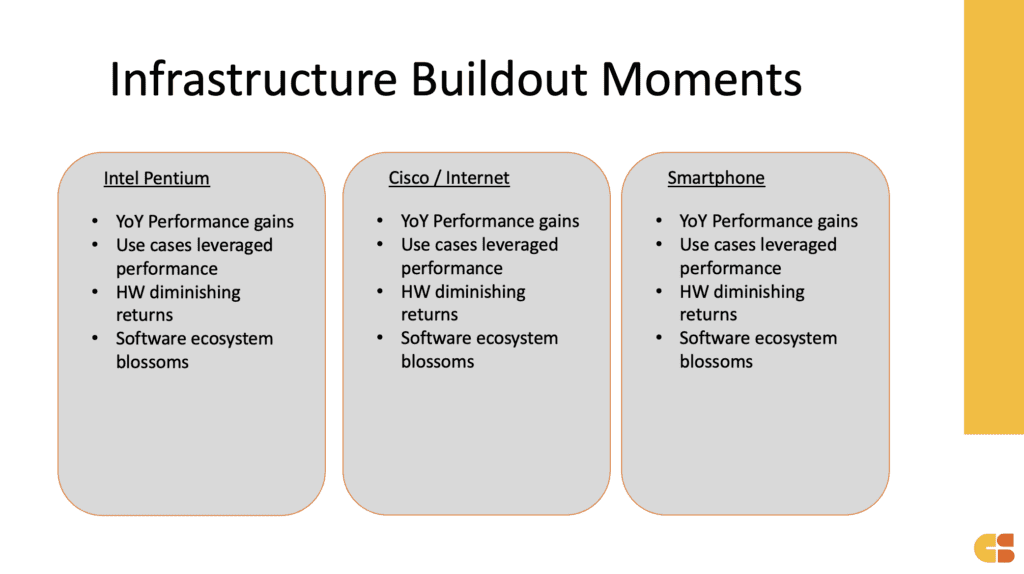

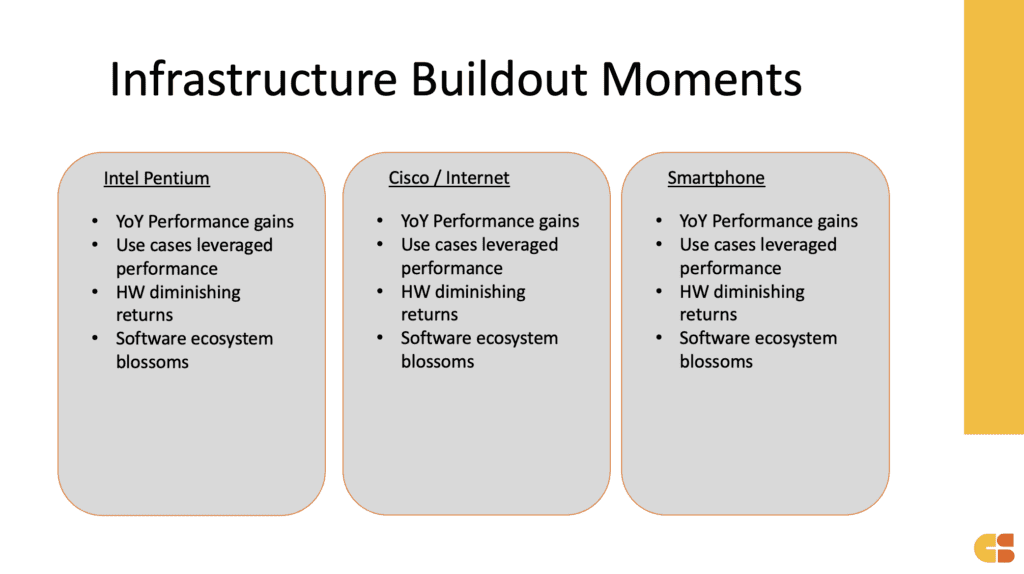

I showed this slide as a historical view of several industry cycles in which an infrastructure buildout (of compute) led to a software boom. Essentially, three main events shaped the industry as we know it today.

The basic read of this slide is that the beginning of each cycle focuses on a performance buildout. It feels like an arms race for leading performance, and each year's performance increase almost immediately feels like not enough. Think about the early days of Intel Pentium advancements, or the early days of the Internet when Cisco raced to bring us faster Internet year over year. The commonality in all of these cycles was that each year's performance gains were met with continued, insatiable demand for more performance. This lasted until the year-over-year gains became nominal and baseline performance essentially felt good enough.

We are currently in one of these moments with AI: Nvidia can't run fast enough to satisfy the insatiable demand for training compute. Whether this is a 12-month cycle or a cycle of two years or more is a key part of the debate. But if history is our guide, there will come a point when GPU computing is good enough, and year-over-year gains in GPU performance matter little to end-market demand.

What Makes This Cycle a Little Different

It is worth pointing out that something about this cycle feels a little different. That difference has everything to do with the immense amount of cloud computing capacity available from hyperscalers like Amazon AWS, Microsoft Azure, and Google Cloud Platform. In the prior cycles I mentioned, hardware performance (the infrastructure buildout) had to come first before software could evolve to take advantage of it. Case in point: in the early Internet, we needed a new modem to take advantage of each infrastructure performance upgrade. The same was true in the early PC era, when we needed a faster processor to get a faster software experience.

This cycle is different in that, thanks to cloud computing, all the amazing AI software being developed can run on existing hardware. Latency and cost remain friction points today, but billions of people can experience AI on the devices they already own. This is why ChatGPT was the fastest-diffusing technology experience in history. Even if people didn't keep using it, hundreds of millions experienced it in less than a year. That has never happened before in the history of the industry.

This cycle is different because rapid innovation is happening in infrastructure buildout and software performance at the same time, combined with the potential for near-instantaneous diffusion of software. This is relatively uncharted territory. We are simultaneously moving too fast and not fast enough.

What Happens Next?

For now, GPUs and AI training accelerators will remain in high demand. At some point, most companies will have trained their models on their data, and even foundational model training will reach a point of diminishing returns. When that happens, the performance bar will shift from training to inference, which means running the trained models to answer queries. At that point, the software ecosystem will blossom even more, and we will see increased innovation in AI software and its underlying capabilities. Large corporations will then likely move more of their data on-premises or into hybrid cloud deployments to keep it more private and secure.

We can say with certainty that this journey we are on with the AI revolution is only just beginning. From what we can quantify and qualify thus far, AI will likely completely change how we interact with technology at a platform level. It will likely be infused into every software experience in some way, shape, or form. There will be periods that feel like a hype cycle and a bubble. Inevitably, though, we will see a rich software cycle built on the infrastructure foundations of high-performance computing, both in the cloud and at the edge.